From large language models like ChatGPT to autonomous vehicles, generative AI, and high-resolution video analytics, AI is transforming nearly every industry at breakneck speed. But behind every intelligent model lies a hidden challenge: feeding massive amounts of data into these AI systems quickly and reliably.

As AI models grow from millions to trillions of parameters, compute demand grows steeply with them. However, it’s not just the processors that are under pressure: data input/output (I/O) becomes the new bottleneck. AI accelerators can’t work in isolation; they need a constant stream of data to stay busy. That’s where PCIe steps in, the silent enabler of AI acceleration, acting as the high-speed data highway that feeds the AI “brain.”

Why AI Needs More Than Just Compute Power

While much attention is given to the number of TOPS (trillions of operations per second) a chip can deliver, AI performance also depends heavily on I/O throughput. Compute units can only work as fast as data arrives: an accelerator that is waiting on input delivers none of its rated TOPS.

Take autonomous vehicles, for instance. These systems ingest real-time data from high-resolution cameras, LiDARs, radars, and IMUs. AI accelerators must process this data instantly to make critical driving decisions. However, if data transfer from the sensors to the AI processor is delayed due to bandwidth limits, the whole system stalls — regardless of how powerful the AI chip is.

This dual pressure — compute and I/O — defines the next generation of AI system architecture.

Enter PCIe: The Data Superhighway of AI

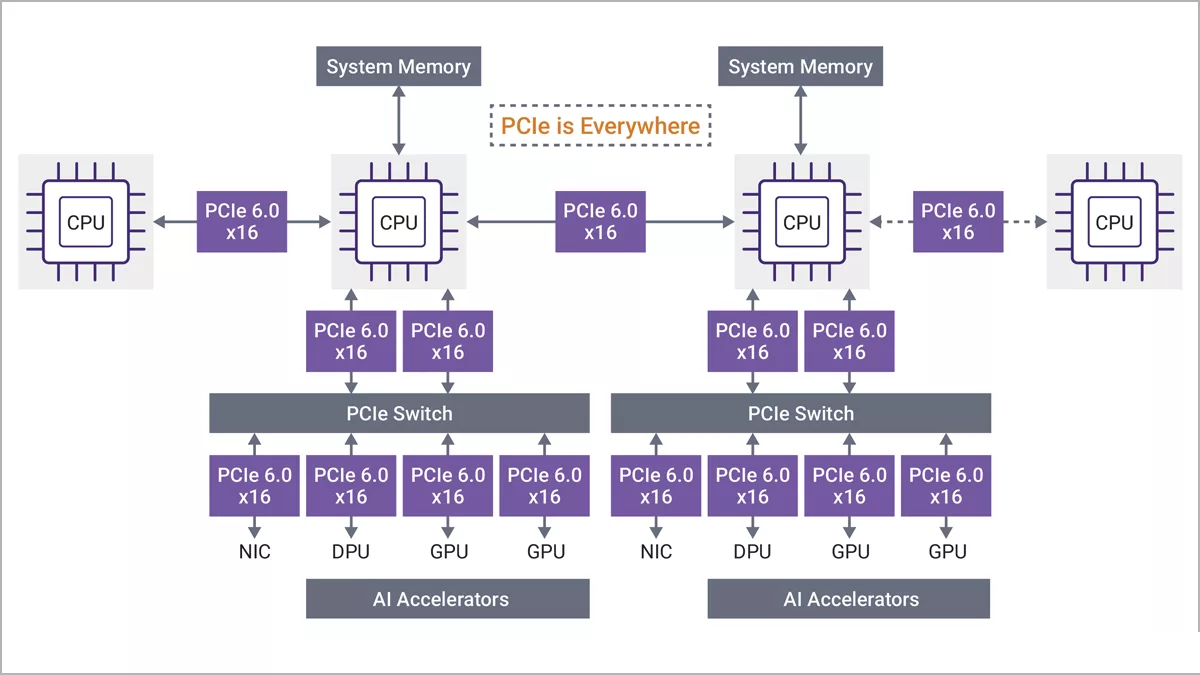

Peripheral Component Interconnect Express (PCIe) is the backbone of high-speed data transfer in modern computing systems. Originally designed to connect peripherals like graphics cards and storage devices, PCIe has now become essential in connecting AI accelerators to CPUs, memory, and each other.

Its key strengths:

- High Bandwidth: PCIe 6.0 delivers 64 GT/s (GigaTransfers per second) per lane, supporting up to 256 GB/s of raw bidirectional bandwidth (128 GB/s in each direction) on a 16-lane (x16) configuration.

- Low Latency: Short, predictable transfer latency helps sustain real-time inference and high-throughput training.

- Scalability: Easily connects multiple GPUs, AI cards, or even multiple servers.

- Reliability and Security: With optional features like Integrity and Data Encryption (IDE), PCIe can protect data in transit, which is critical for sensitive AI applications.

Demystifying PCIe Bandwidth: A Quick Formula

Let’s break down the raw performance numbers.

For PCIe 6.0:

- Each lane transmits at 64 GT/s.

- A typical AI accelerator might use x16 lanes.

Total Transmission Rate = 64 GT/s × 16 = 1024 GT/s

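PCIe 6.0 uses PAM4 (Pulse Amplitude Modulation with 4 levels), which carries two bits per symbol instead of the single bit carried by the NRZ signaling of earlier generations. That is how it doubles the per-lane rate of PCIe 5.0 without doubling the signaling frequency. Each transfer still moves one bit per lane, so dividing by 8 converts bits to bytes. These are raw figures; usable throughput is somewhat lower once FLIT framing, CRC, and forward error correction overhead are accounted for.

Raw Data Rate = 1024 GT/s ÷ 8 = 128 GB/s (unidirectional)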

Bidirectional Total Bandwidth = 128 × 2 = 256 GB/s
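
The same arithmetic can be written as a small helper. This is a minimal sketch, not an official formula from the specification: the function name `pcie_raw_bandwidth_gbytes` is made up for illustration, and it assumes one bit per transfer per lane and ignores all protocol overhead.

```python
def pcie_raw_bandwidth_gbytes(gt_per_s_per_lane: float, lanes: int) -> tuple:
    """Return (unidirectional, bidirectional) raw bandwidth in GB/s.

    Assumes one bit per transfer per lane and no protocol overhead.
    """
    total_gt_per_s = gt_per_s_per_lane * lanes  # aggregate rate across all lanes
    unidirectional = total_gt_per_s / 8         # 8 bits per byte
    bidirectional = unidirectional * 2          # links are full duplex
    return unidirectional, bidirectional


uni, bi = pcie_raw_bandwidth_gbytes(64, 16)
print(f"x16 @ 64 GT/s: {uni:.0f} GB/s per direction, {bi:.0f} GB/s bidirectional")
# prints: x16 @ 64 GT/s: 128 GB/s per direction, 256 GB/s bidirectional
```

Changing the `lanes` argument shows how the figure shrinks on narrower links, for example x4 or x8 slots on edge hardware.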

This speed is essential when moving large model weights, real-time sensor data, or streaming high-definition video to an accelerator for AI processing.
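
To get a feel for what that bandwidth means in practice, the sketch below estimates how long it would take to push a set of model weights across the link. The model size (70 billion parameters at 2 bytes each) and the 80% efficiency factor are hypothetical values chosen for illustration, not measurements, and `transfer_seconds` is an illustrative helper rather than part of any library.

```python
def transfer_seconds(payload_gbytes: float, link_gbytes_per_s: float,
                     efficiency: float = 1.0) -> float:
    """Estimate the time to move a payload over a link.

    efficiency is an assumed fraction of raw bandwidth that is actually usable.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return payload_gbytes / (link_gbytes_per_s * efficiency)


# Hypothetical payload: 70e9 parameters * 2 bytes per parameter = 140 GB
weights_gbytes = 70e9 * 2 / 1e9

# 128 GB/s is the raw unidirectional rate of a PCIe 6.0 x16 link
best_case = transfer_seconds(weights_gbytes, 128)
derated = transfer_seconds(weights_gbytes, 128, efficiency=0.8)  # assumed overhead

print(f"best case: {best_case:.2f} s, at 80% efficiency: {derated:.2f} s")
```

Real transfers also depend on the storage or memory feeding the link, so this is best read as a lower bound set by the PCIe link alone.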

PCIe + AI Accelerators: A Match Made for Performance

AI accelerators come in all shapes and architectures — but they all share one thing in common: the need for fast data movement. Consider:

- NVIDIA GPUs: The workhorse of deep learning training and inference in data centers.

- Hailo-8: A compact, energy-efficient AI processor for edge vision tasks.

- Graphcore IPUs: Designed specifically for AI graph computation with thousands of processing cores.

- Cerebras WSE (Wafer-Scale Engine): An entire wafer used as a single AI chip, delivering unmatched scale.

- Custom Spatial Accelerators: Chips operating in parallel arrays for AI “farms.”

- Geniatech AI Accelerators: Cost-effective, low-power AI modules and box PCs for embedded and edge computing, optimized for real-time inference across diverse industrial applications.

Most of these architectures rely on high-bandwidth PCIe interfaces somewhere in the data path, typically as the link to the host. Without enough lanes or sufficient throughput, even the most advanced AI chip is underutilized.

Think of PCIe lanes like food court windows — the more windows you open, the faster the customers (data) can be served to the chefs (AI cores). The fewer the lanes, the longer the queue.
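
The queueing intuition can be put into rough numbers. The sketch below assumes a deliberately simplified model: a workload that is purely I/O-bound, with no caching and no overlap of compute and transfer. The 100 GB/s input demand is a hypothetical figure, and `accelerator_utilization` is an illustrative name, not a real API.

```python
def accelerator_utilization(required_gbytes_per_s: float,
                            gt_per_s_per_lane: float, lanes: int) -> float:
    """Fraction of peak the accelerator can sustain if the link is the only limit."""
    link_gbytes_per_s = gt_per_s_per_lane * lanes / 8  # raw, one direction
    return min(1.0, link_gbytes_per_s / required_gbytes_per_s)


REQUIRED_GBYTES_PER_S = 100.0  # hypothetical input rate the chip needs at full speed

for lanes in (1, 4, 8, 16):
    util = accelerator_utilization(REQUIRED_GBYTES_PER_S, 64, lanes)
    print(f"x{lanes:<2} at 64 GT/s -> {util:.0%} of peak")
```

Under these assumptions, only the x16 link keeps the hypothetical accelerator fully fed; the narrower links cap it at the ratio of link bandwidth to demand.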

The Road Ahead: PCIe 7.0 and Beyond

The next generation, PCIe 7.0, doubles the per-lane rate again to 128 GT/s, targeting up to 512 GB/s of raw bidirectional bandwidth on an x16 link. While this may sound excessive today, consider the AI workloads of tomorrow:

- Real-time fusion of LiDAR + video + NLP in autonomous vehicles.

- Simultaneous multimodal AI on edge devices.

- Massive AI model streaming from cloud to on-prem inference clusters.

- Persistent AI feedback loops between storage, CPU, and accelerators.

The growth in data is not slowing down. PCIe must evolve in parallel to support these increasingly data-hungry applications.
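
To put that evolution in perspective, the sketch below tabulates raw x16 bandwidth across recent generations using the published per-lane transfer rates. It applies the same simple divide-by-8 conversion throughout, so it ignores encoding and protocol overhead (such as the 128b/130b encoding used by PCIe 3.0 through 5.0); real-world figures are somewhat lower.

```python
# Per-lane transfer rates in GT/s for each generation
PER_LANE_GT_PER_S = {
    "PCIe 3.0": 8,
    "PCIe 4.0": 16,
    "PCIe 5.0": 32,
    "PCIe 6.0": 64,
    "PCIe 7.0": 128,
}


def x16_raw_gbytes_per_direction(gt_per_s_per_lane: float) -> float:
    """Raw x16 bandwidth in one direction, ignoring encoding overhead."""
    return gt_per_s_per_lane * 16 / 8


for generation, rate in PER_LANE_GT_PER_S.items():
    one_way = x16_raw_gbytes_per_direction(rate)
    print(f"{generation}: {one_way:.0f} GB/s per direction, "
          f"{one_way * 2:.0f} GB/s bidirectional")
```

Each generation doubles the previous one, which is the pattern the interface needs to hold to keep pace with data-hungry workloads.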

Conclusion: No AI Without I/O

AI acceleration isn’t just about compute — it’s about how fast and efficiently you can move data to and from the compute engines. PCIe plays a foundational role in this process, acting as the high-speed vascular system of AI platforms.

Without robust I/O infrastructure, even the smartest AI chip can’t perform. As AI continues to expand into every industry, from healthcare to automotive, PCIe will remain a critical enabler — quietly ensuring that intelligent systems get the data they need, when they need it.