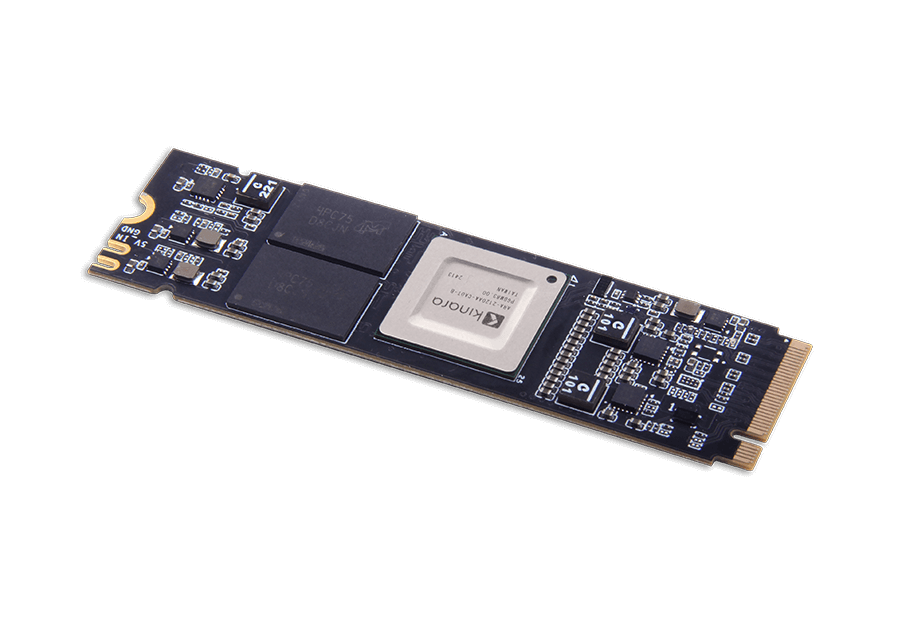

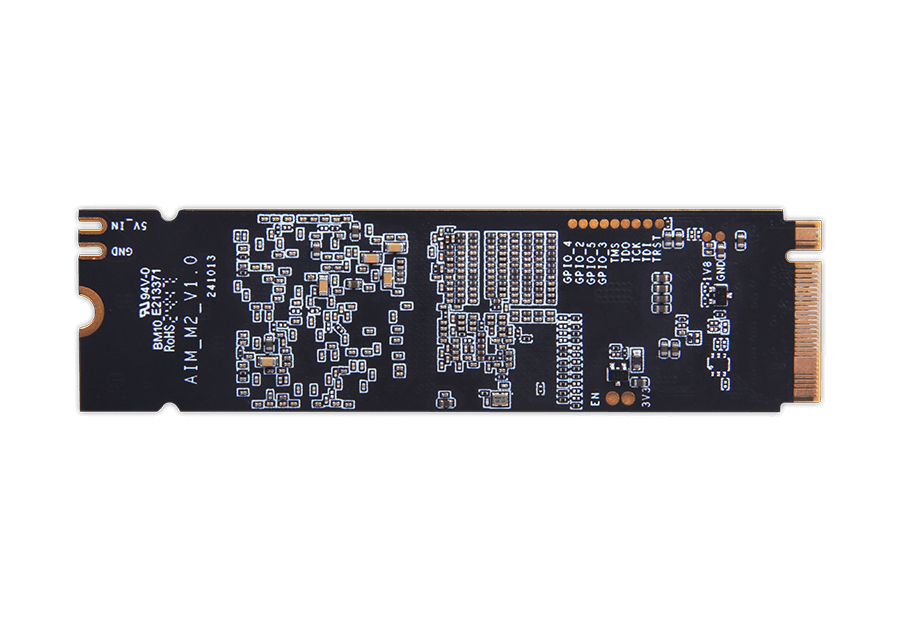

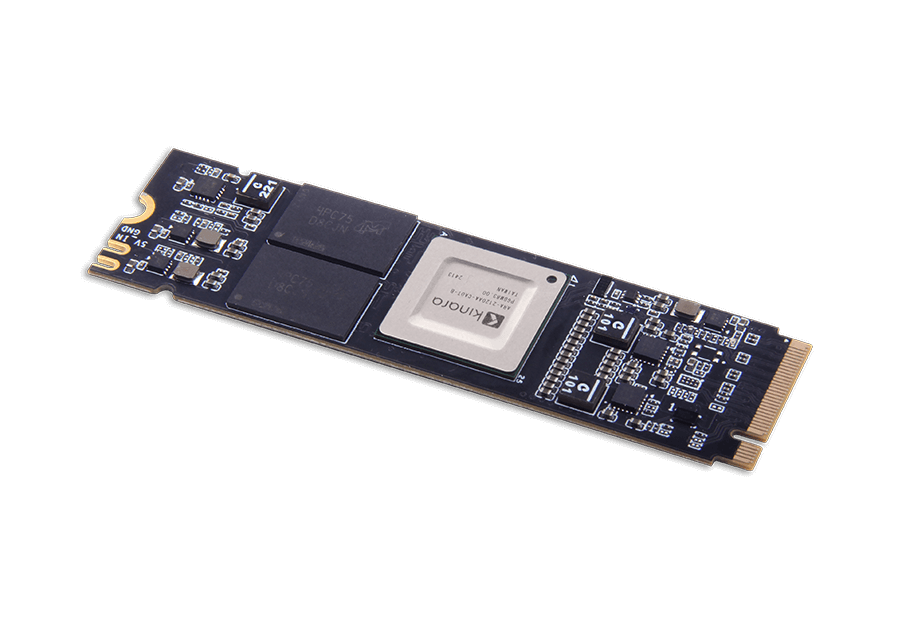

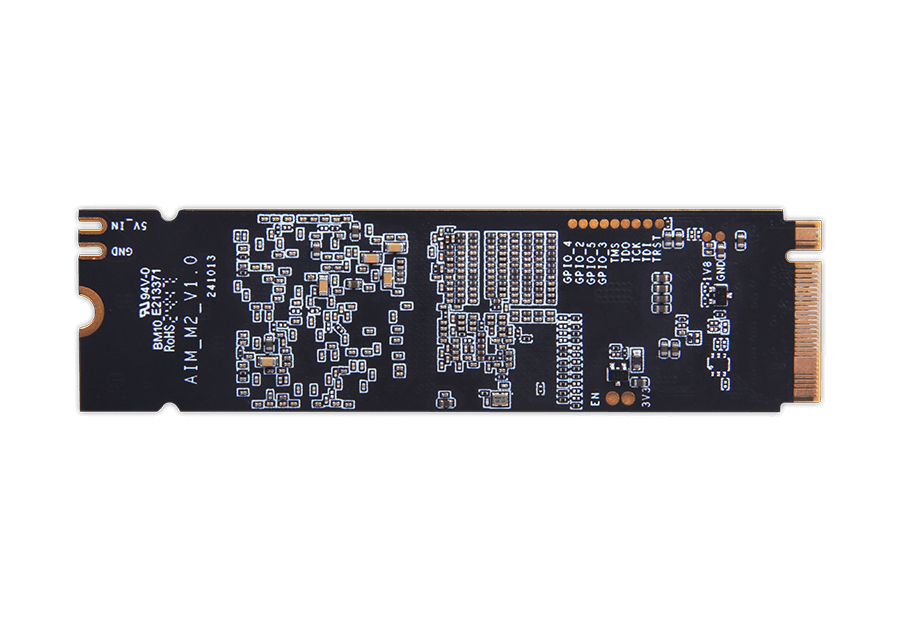

Geniatech AIM-M2 is a cost-effective M.2 AI accelerator module powered by the Kinara Ara-2 NPU. Its standard M.2 interface allows it to be integrated into edge servers, embedded systems, and industrial PCs. Optimized for transformer and generative AI models, the AIM-M2 supports models such as Llama 2 and YOLOv8, as well as major AI frameworks including ONNX, TensorFlow, and PyTorch, making it well suited to edge AI deployment.

Use the M.2 interface for plug-and-play evaluation on x86 and ARM hosts, enabling AI model demonstrations within hours and significantly shortening development cycles.

Delivers up to 40 TOPS (INT8) at a typical power draw of 12 W, providing an optimal balance of high throughput and power efficiency for edge deployments.
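As a rough figure of merit, the two headline numbers can be combined into an efficiency ratio and an energy-per-operation figure. Note that this divides peak INT8 throughput by typical (not peak) power, so it is a nominal ratio, not a measured efficiency for any particular workload.

$$
\frac{40\ \text{TOPS}}{12\ \text{W}} \approx 3.3\ \text{TOPS/W},
\qquad
\frac{12\ \text{W}}{40 \times 10^{12}\ \text{ops/s}} = 0.3\ \text{pJ/op}
$$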

Accelerate deployment with extensive support for frameworks such as TensorFlow, PyTorch, and ONNX, and for popular models such as YOLOv8 and Llama 2.

Seamlessly integrates with leading host processors from NVIDIA, Qualcomm, Xilinx, and NXP over a high-speed PCIe Gen4 x4 interface, ensuring design flexibility.
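To put "PCIe Gen4 x4" in concrete terms, the sketch below computes the theoretical one-direction link bandwidth and the minimum time to move one input frame to the card. It assumes standard PCIe Gen4 signaling (16 GT/s per lane with 128b/130b encoding) and ignores packet and protocol overhead, so measured throughput will be lower. The 640×640 RGB frame is a hypothetical YOLOv8-style input chosen for illustration, not a figure from this page.

```python
# Back-of-envelope PCIe link bandwidth, assuming PCIe Gen4 signaling
# (16 GT/s per lane) and 128b/130b line encoding. Packet (TLP/DLLP)
# overhead is ignored, so real-world throughput will be lower.

GEN4_GT_PER_S = 16e9              # transfers per second per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b encoding used by Gen3 and later


def pcie_bandwidth_bytes_per_s(lanes: int, gt_per_s: float = GEN4_GT_PER_S) -> float:
    """Theoretical one-direction payload bandwidth in bytes per second."""
    bits_per_s = gt_per_s * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8


def transfer_time_us(num_bytes: int, lanes: int = 4) -> float:
    """Lower bound on the time to move num_bytes across the link, in microseconds."""
    return num_bytes / pcie_bandwidth_bytes_per_s(lanes) * 1e6


if __name__ == "__main__":
    bw = pcie_bandwidth_bytes_per_s(lanes=4)
    print(f"Gen4 x4: {bw / 1e9:.2f} GB/s per direction")
    # Hypothetical input: one 640x640 RGB frame stored as uint8
    frame_bytes = 640 * 640 * 3
    print(f"640x640x3 uint8 frame: {transfer_time_us(frame_bytes):.0f} us")
```

Run as a script, it reports about 7.88 GB/s per direction for a Gen4 x4 link and about 156 µs to transfer one such frame, which suggests that for vision models of this size the host link is unlikely to be the main bottleneck.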

The integrated module solution significantly reduces total development cost and BOM complexity, delivering faster ROI for volume production.

Features up to 16 GB of LPDDR4X memory and operates reliably from 0°C to 70°C, offering robust performance in industrial environments.
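A quick way to judge which models fit in on-card memory is to estimate weight storage from parameter count and bytes per parameter. This sketch counts weights only (KV cache, activations, and runtime buffers are ignored), treats 16 GB as 16 GiB, and uses nominal Llama 2 sizes (7B, 13B, 70B). The precision labels are illustrative assumptions, since this page does not state which quantization formats the Ara-2 toolchain supports.

```python
# Back-of-envelope check: do a model's weights fit in on-card DRAM?
# Assumptions (not from the product page): weights only, nominal parameter
# counts, illustrative precisions, and 16 GiB for the largest memory option.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
GIB = 2**30


def weight_gib(num_params: float, precision: str) -> float:
    """Weight storage in GiB for a given parameter count and precision."""
    return num_params * BYTES_PER_PARAM[precision] / GIB


def fits(num_params: float, precision: str, capacity_gib: float = 16.0) -> bool:
    """True if the weights alone fit; real deployments need extra headroom."""
    return weight_gib(num_params, precision) <= capacity_gib


if __name__ == "__main__":
    models = [("Llama 2 7B", 7e9), ("Llama 2 13B", 13e9), ("Llama 2 70B", 70e9)]
    for name, params in models:
        for precision in BYTES_PER_PARAM:
            size = weight_gib(params, precision)
            verdict = "fits" if fits(params, precision) else "does not fit"
            print(f"{name:12s} {precision:5s} {size:6.1f} GiB  {verdict}")
```

Under these assumptions, the 7B model's weights fit even at FP16 (about 13 GiB, leaving little headroom for the KV cache), the 13B model needs 8-bit or lower precision, and the 70B model does not fit at any of the listed precisions. Smaller memory configurations lower the `capacity_gib` threshold accordingly.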

Provides complete hardware modules, drivers, and software toolchains, allowing developers to focus on application innovation rather than hardware design.

Geniatech provides technical collaboration and customization services to accelerate your product development and ensure optimal integration.

| Specification | Details |
|---|---|
| Chip | Kinara Ara-2 |
| AI performance | 40 TOPS (INT8); supports Llama 2 and YOLOv8 |
| Host processors | NXP, NVIDIA, Qualcomm, Xilinx |
| Supported AI frameworks | TensorFlow, TorchScript, PyTorch, ONNX, Caffe, MXNet |
| Memory | Up to 16 GB LPDDR4/LPDDR4X |
| Host interface | M.2 M-key (PCIe Gen4 x4) |
| OS support | Linux, Windows |
| Power consumption (typical) | 12 W |
| Operating temperature | 0°C to 70°C |
| Dimensions | 22 × 80 mm |