Hot Search

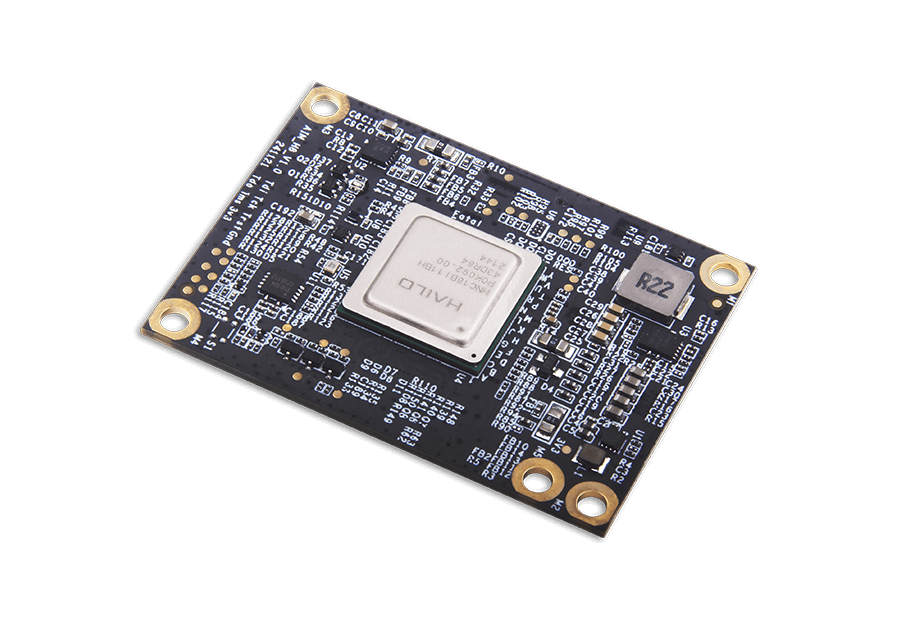

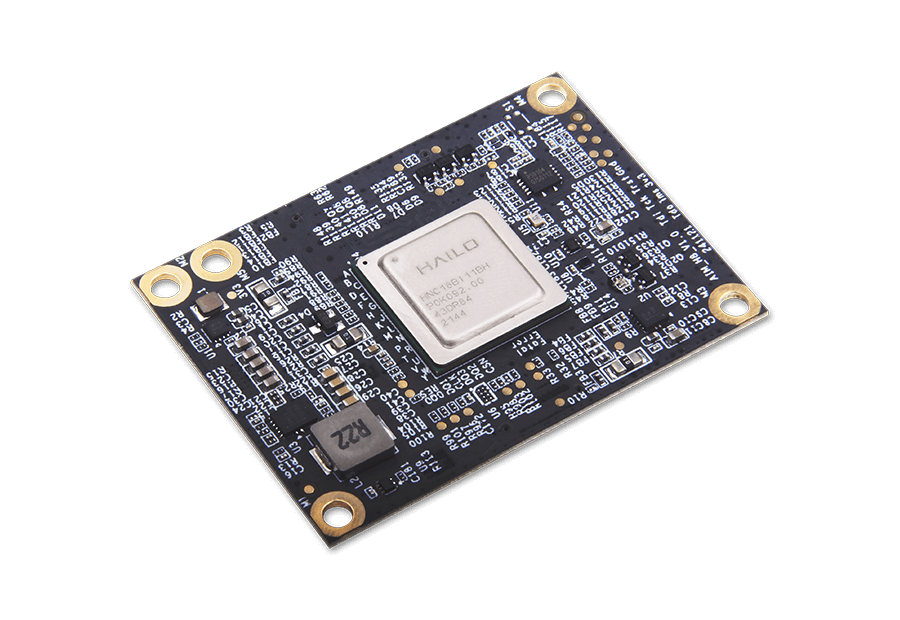

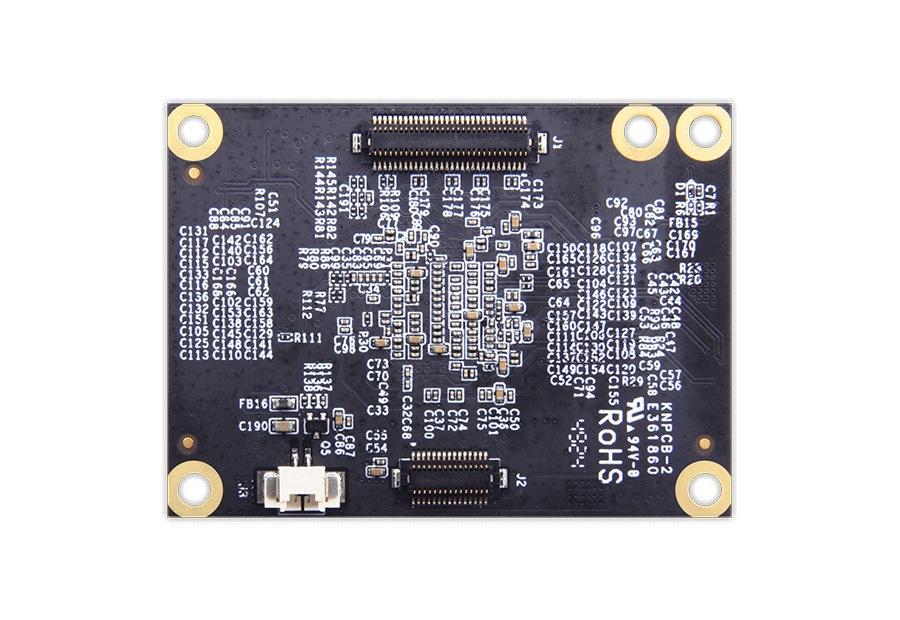

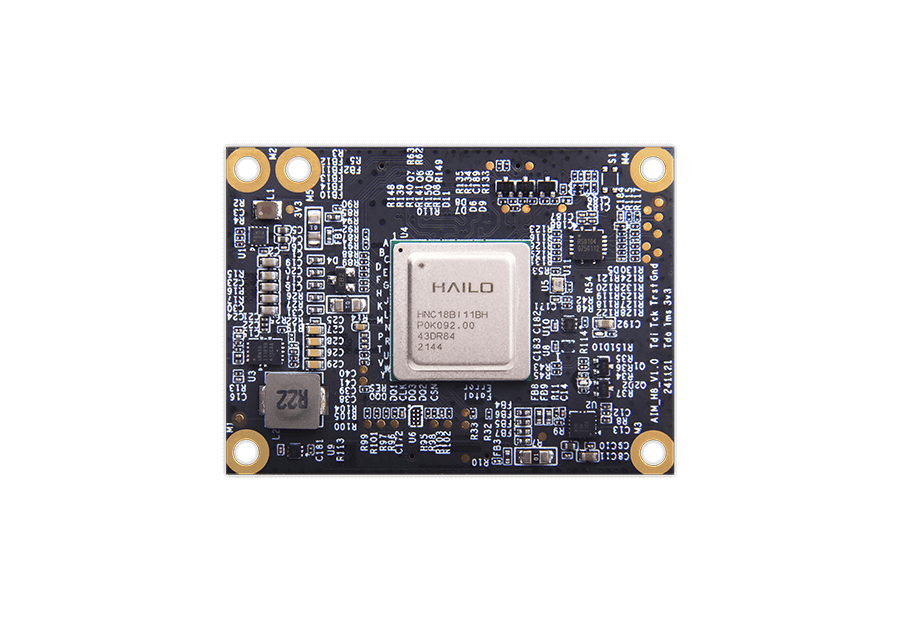

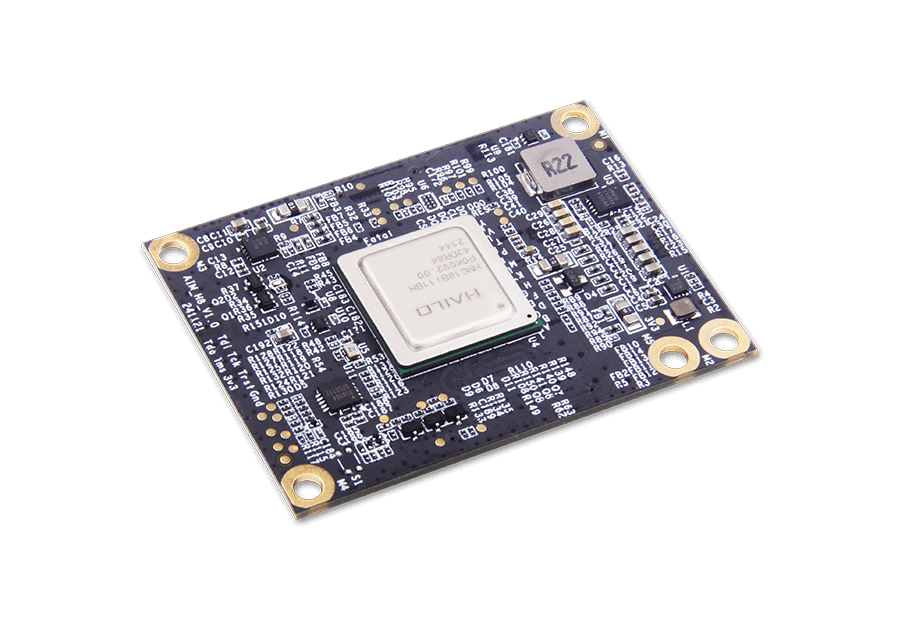

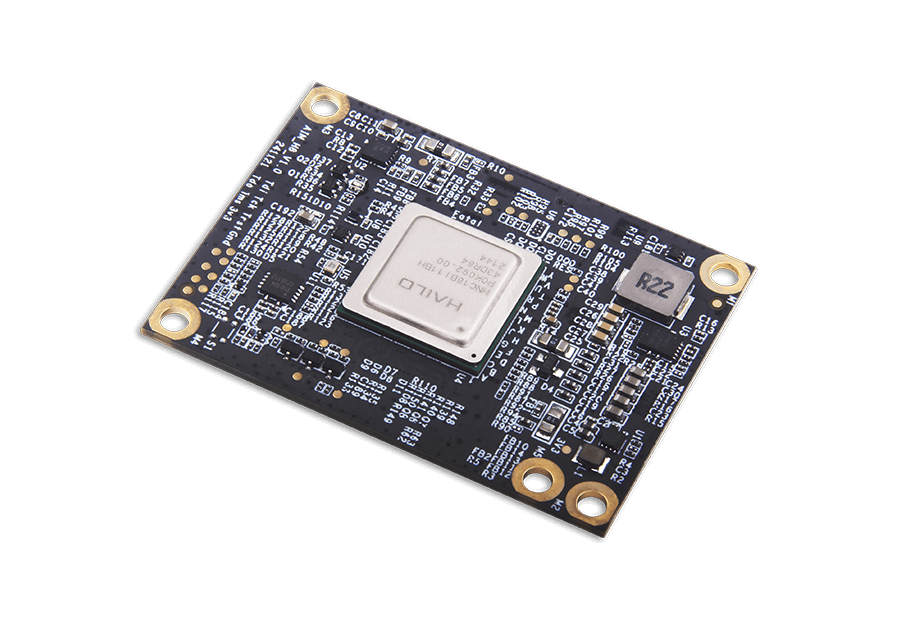

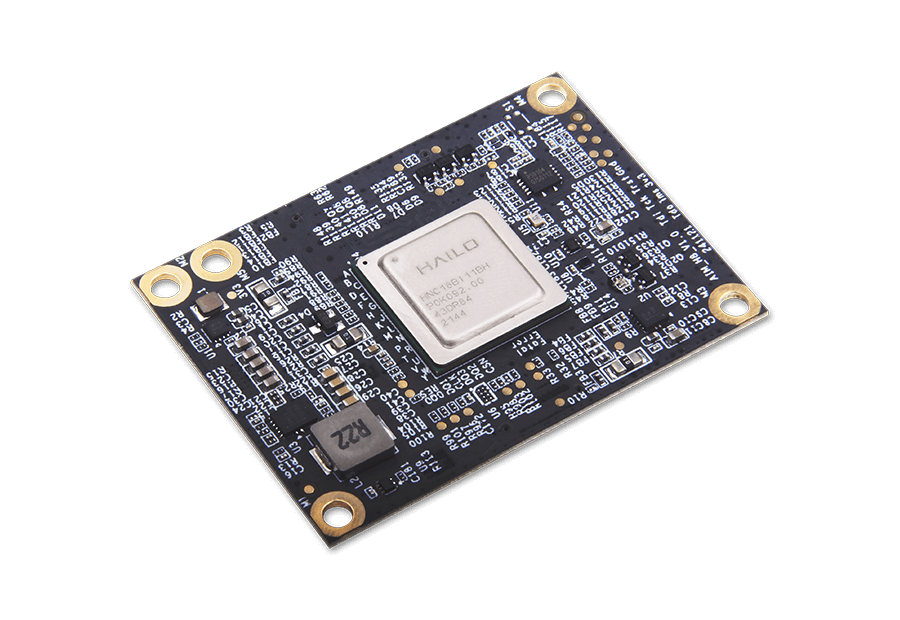

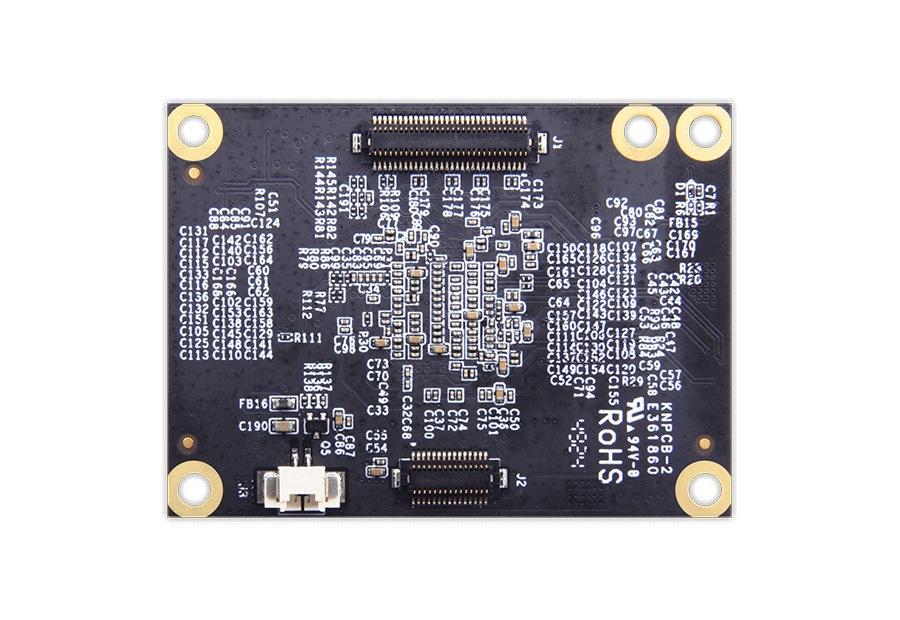

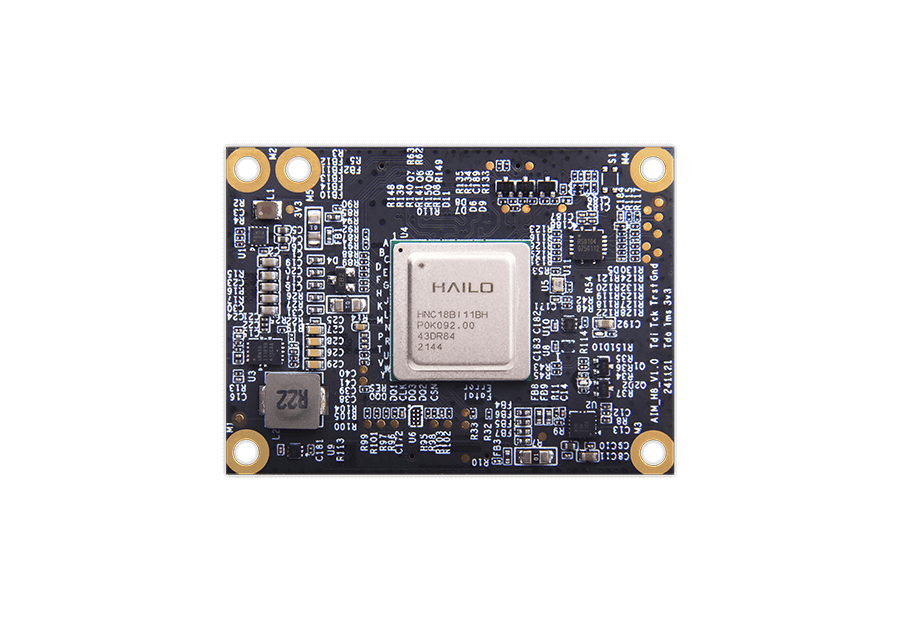

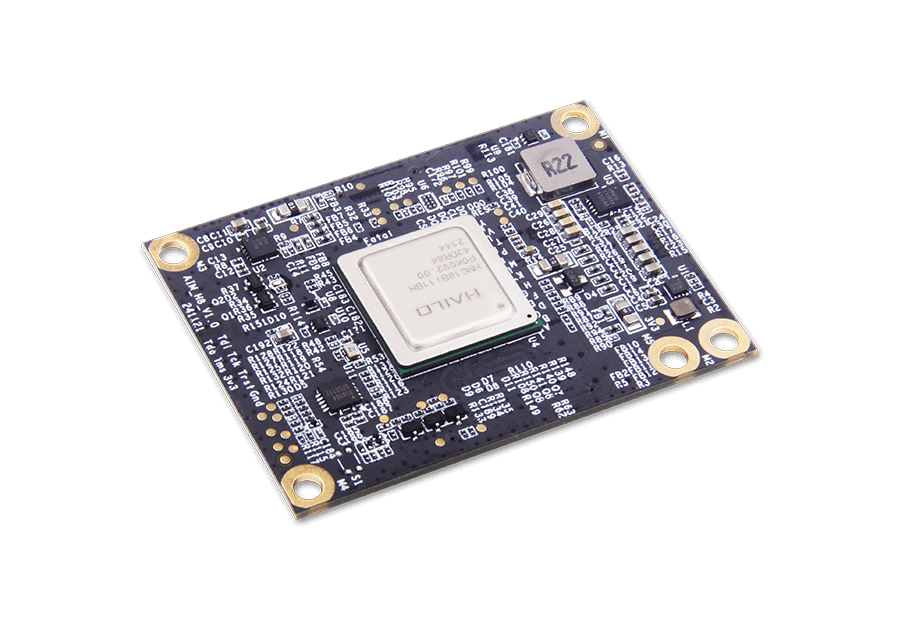

The Geniatech AIM-H8 is a compact, power-efficient AI acceleration module based on the Hailo-8 NPU, delivering up to 26 TOPS (INT8) with a TDP of just 2.5W. It features a Board-to-Board (B2B) connector for flexible integration into custom carrier boards. With ultra-low latency and support for multi-stream inference, it enables real-time performance for edge AI applications while minimizing power consumption.

- The core-module design allows direct integration into professional products, significantly shortening development cycles and reducing engineering risk.
- Delivers 26 TOPS of dedicated INT8 performance for demanding edge workloads such as real-time computer vision and sensor fusion.
- Achieves 26 TOPS at just 5W typical power, easing thermal constraints for high-performance AI in compact professional systems.
- Hailo's dataflow architecture provides predictable, real-time inference performance for industrial automation and autonomous systems.
- Works with a wide range of host processors (x86/ARM), providing architectural flexibility across product lines.
- Full compatibility with leading AI frameworks, together with a complete SDK, streamlines model deployment and optimization.
- Engineered for harsh environments with a -40°C to 85°C operating range, making it suitable for automotive and industrial applications.
- Geniatech provides technical collaboration and customization services to accelerate your product development and ensure optimal integration.
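
The performance and power figures above translate into simple efficiency arithmetic. The sketch below divides the 26 TOPS INT8 peak by both power figures quoted on this page (2.5W and 5W) and estimates a theoretical frame-rate ceiling for a model. The helper names, the 10 GOPs-per-frame model cost, and the 50% utilization factor are hypothetical placeholders for illustration, not measured AIM-H8 values.

```python
# Illustrative efficiency arithmetic; helper names and model figures are hypothetical.
PEAK_TOPS = 26.0  # INT8 peak from the spec table


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak efficiency: tera-operations per second per watt."""
    return tops / watts


def max_frames_per_second(tops: float, gops_per_frame: float, utilization: float = 1.0) -> float:
    """Theoretical ceiling: usable ops per second divided by ops needed per frame."""
    ops_per_second = tops * 1e12 * utilization
    return ops_per_second / (gops_per_frame * 1e9)


if __name__ == "__main__":
    # Both power figures appear on this page; compute efficiency for each.
    for watts in (2.5, 5.0):
        print(f"{watts} W -> {tops_per_watt(PEAK_TOPS, watts):.1f} TOPS/W")
    # 10 GOPs/frame and 50% utilization are assumptions, not benchmark results.
    print(f"{max_frames_per_second(PEAK_TOPS, 10.0, 0.5):.0f} fps ceiling")
```

Real throughput depends on the compiled model, batch size, and host pipeline, so the ceiling is only a sanity check, not a performance prediction.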

| Specification | Value |
|---|---|
| Chip | Hailo-8 |
| AI Performance | 26 TOPS (INT8) |
| Host Processor | NXP, Renesas, TI, Rockchip, SocioNext, Xilinx |
| Supported AI Frameworks | TensorFlow, TensorFlow Lite, Keras, PyTorch, ONNX |
| Memory | Integrated on-chip |
| Host Interface | B2B (PCIe Gen3 x4) |
| OS Support | Linux, Windows |
| Power Consumption (Typical) | 5W |
| Operating Temperature | -40°C to 85°C |
| Dimensions | 40 × 55 mm |
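
The 4-lane PCIe Gen3 host interface sets an upper bound on how much input data can reach the NPU. The sketch below uses the standard PCIe 3.0 figures (8 GT/s per lane, 128b/130b line coding) to compute per-direction bandwidth, then estimates how many uncompressed frames per second could cross the link. The helper names and the 1080p RGB frame size are hypothetical examples, and packet/protocol overhead is deliberately ignored.

```python
# PCIe Gen3 link-budget arithmetic; helper names and frame size are hypothetical.
GEN3_TRANSFERS_PER_LANE = 8e9   # PCIe 3.0: 8 GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding


def pcie_gen3_bytes_per_second(lanes: int) -> float:
    """Per-direction bandwidth after line coding, before packet/protocol overhead."""
    bits_per_second = lanes * GEN3_TRANSFERS_PER_LANE * ENCODING_EFFICIENCY
    return bits_per_second / 8


def max_uncompressed_frames(lanes: int, width: int, height: int, bytes_per_pixel: int = 3) -> float:
    """Frames per second that fit in the link if frames are sent uncompressed."""
    frame_bytes = width * height * bytes_per_pixel
    return pcie_gen3_bytes_per_second(lanes) / frame_bytes


if __name__ == "__main__":
    print(f"x4 link: {pcie_gen3_bytes_per_second(4) / 1e9:.2f} GB/s per direction")
    print(f"1080p RGB: {max_uncompressed_frames(4, 1920, 1080):.0f} fps ceiling")
```

Actual transfer rates are lower because of packet headers and flow control, while frames are often resized to the model's input resolution before transfer, which lowers the per-frame cost.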

Q: What is the AIM-H8?

A: The AIM-H8 is a compact AI accelerator module powered by the Hailo-8 NPU, delivering up to 26 TOPS of AI performance for real-time edge inference and low-latency AI computing.

Q: What are the main advantages of the AIM-H8?

A: It delivers high AI performance (26 TOPS) while consuming low power (around 2.5W TDP), making it ideal for efficient edge AI applications.

Q: Which AI frameworks and operating systems does the AIM-H8 support?

A: The AIM-H8 supports major AI frameworks such as TensorFlow, TensorFlow Lite, PyTorch, ONNX, and Keras, and is compatible with Linux and Windows hosts.

Q: What applications is the AIM-H8 suited for?

A: It is suited for edge AI, real-time inference, industrial automation, vision applications, smart cities, robotics, and IoT systems where fast, efficient AI is needed.