Geniatech AI Accelerator Modules offer a cost-effective, flexible, and ready-to-deploy hardware solution for adding AI capabilities to edge devices. Available in M.2 and board-to-board connector formats, these modules integrate with existing PCs and edge systems to accelerate deep learning inference. Designed for scalability, they enable real-time, low-latency AI inferencing across a wide range of industrial and IoT applications. By combining high performance with low power consumption, Geniatech's AI accelerator cards are tailored to the demands of edge AI deployment.

An AI accelerator card or module is a hardware component built to run AI workloads far more efficiently than a general-purpose CPU. It is typically based on an ASIC (Application-Specific Integrated Circuit) optimized for the massively parallel matrix and vector operations used in deep learning.

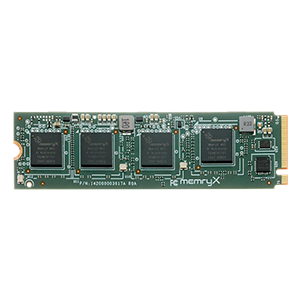

These accelerators are deployed either as plug-and-play modules (e.g., M.2 or PCIe cards) or integrated directly onto custom boards. Working as a co-processor alongside the main CPU, they handle the intensive neural network calculations, freeing the CPU for system tasks and greatly improving both performance and power efficiency in edge computing.
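The co-processor split described above can be illustrated with a minimal sketch: the host thread hands frames off through a queue and stays free, while a worker thread drains the queue and runs inference. The worker and the `fake_accelerator_infer` function are hypothetical stand-ins for a vendor SDK call; they are not Geniatech's API, and a real deployment would replace them with the accelerator's own runtime.

```python
import queue
import threading
from typing import Callable, List, Optional, Tuple


def run_offloaded(frames: List[List[float]],
                  infer: Callable[[List[float]], float]) -> List[float]:
    """Hand frames to a worker thread that stands in for the accelerator.

    The calling thread only enqueues work (its "system" role); the worker
    thread takes each frame off the queue and runs `infer` on it.
    """
    jobs: "queue.Queue[Optional[Tuple[int, List[float]]]]" = queue.Queue()
    results: List[float] = [0.0] * len(frames)

    def worker() -> None:
        while True:
            item = jobs.get()
            if item is None:  # sentinel: no more work to process
                break
            idx, frame = item
            results[idx] = infer(frame)  # the "offloaded" computation

    t = threading.Thread(target=worker)
    t.start()
    for idx, frame in enumerate(frames):
        jobs.put((idx, frame))  # host returns immediately after handing off
    jobs.put(None)
    t.join()  # wait until the worker has finished every frame
    return results


def fake_accelerator_infer(frame: List[float]) -> float:
    # Hypothetical model: a dot product with fixed weights, standing in
    # for a neural network executed on the accelerator.
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, frame))


if __name__ == "__main__":
    print(run_offloaded([[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]],
                        fake_accelerator_infer))
```

Running the example prints `[3.0, 0.0]`. A single worker keeps results in order; real accelerator runtimes usually expose their own asynchronous submit/wait calls instead of a Python thread.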

Exceptional Performance per Watt

AI accelerators use specialized architectures to deliver very high TOPS (tera operations per second) while drawing only a few watts, enabling strong AI performance even within tight thermal and power envelopes.
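Performance per watt is simply throughput divided by power. The sketch below computes TOPS/W and the energy spent per inference; the figures in the usage example (40 TOPS at 5 W, 500 inferences per second) are illustrative assumptions, not specifications of any Geniatech module.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Compute efficiency: peak TOPS divided by power draw in watts."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


def energy_per_inference_j(power_w: float, inferences_per_s: float) -> float:
    """Energy per inference in joules: watts (J/s) divided by inferences/s."""
    if inferences_per_s <= 0:
        raise ValueError("throughput must be positive")
    return power_w / inferences_per_s


if __name__ == "__main__":
    # Hypothetical module: 40 TOPS peak at 5 W, running 500 inferences/s.
    print(tops_per_watt(40, 5))             # 8.0 TOPS/W
    print(energy_per_inference_j(5, 500))   # 0.01 J per inference
```

Energy per inference is often the more useful metric at the edge, since peak TOPS rarely translates one-to-one into sustained model throughput.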

Rapid Integration and Time-to-Market

With standard interfaces and form factors like PCIe and M.2, accelerators can be plugged into existing x86 or ARM systems, cutting redesign effort and significantly shortening development and launch cycles.

Deterministic, Low-Latency Performance

On-chip memory and streamlined data paths allow accelerators to provide predictable, low-latency inference, meeting strict real-time requirements in robotics, automation, and video analytics.

Optimized Total Cost of Ownership

High compute density, easier integration, and lower power and cooling demands reduce hardware count, engineering effort, and operating costs, improving long-term TCO for large-scale edge deployments.
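The TCO argument can be made concrete with a simple cost model: upfront hardware cost plus energy cost over the deployment lifetime plus one-off engineering cost. This is a deliberately simplified sketch (it ignores cooling, maintenance, and financing), and every number in the usage example is a hypothetical placeholder.

```python
def simple_tco(units: int, unit_cost: float, power_w: float,
               price_per_kwh: float, years: float,
               engineering_cost: float = 0.0) -> float:
    """Rough total cost of ownership for a fleet of edge devices.

    hardware = units * unit_cost
    energy   = units * power_w * hours / 1000 (kWh) * price_per_kwh
    """
    hours = years * 365 * 24                      # continuous operation
    energy_kwh = units * power_w * hours / 1000   # W*h -> kWh
    return units * unit_cost + energy_kwh * price_per_kwh + engineering_cost


if __name__ == "__main__":
    # Hypothetical fleet: 100 devices, 200 per device, 5 W each,
    # 0.20 per kWh, running around the clock for 3 years.
    print(simple_tco(100, 200.0, 5.0, 0.20, 3))  # about 22628.0
```

Lower per-device power shrinks the energy term linearly, and fewer devices (from higher compute density) shrink both the hardware and energy terms.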

AI accelerator modules are designed to meet diverse deployment needs. The choice of form factor directly impacts integration complexity, performance scalability, and suitability for the target environment.